Do you want to use Cline with SAP AI Core?

Do you want to use ChatWise/Claude Code/Cherry Studio/Lobe Chat with SAP AI Core?

Then try the SAP AI Core LLM Proxy Server

This project establishes a proxy server to …

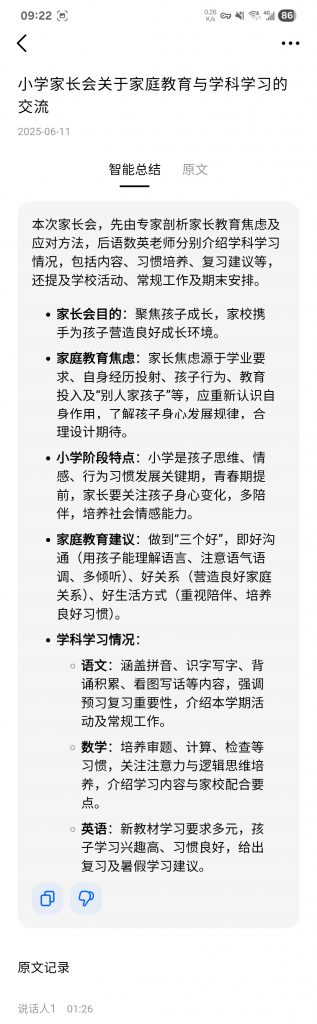

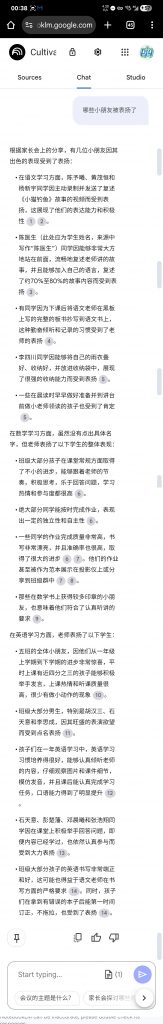

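Yesterday I attended the parent-teacher meeting for my child's first-grade class (second semester). The meeting lasted two hours.

Out of boredom, I started recording it on my phone, and that led to the experiments below.

The whole process was smooth. NotebookLM really is one of the strongest AI apps: the generated mind map was accurate, the podcast-style audio conversation captured the key points of the meeting, and I instantly had a meeting assistant I could ask about anything that was discussed.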

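Take a step back and the sea and sky open wide; yield a little and all troubles vanish like smoke and clouds.

Everyone knows how fine the open sky is, so why not cast aside distracting thoughts, spread your wings, go all in, and have nothing to fear between heaven and earth.

One misstep becomes an eternal regret; by the time you turn back, a lifetime has passed.

Walk every step of your own way well.

All descriptions of scenery are expressions of feeling; use your heart to sense everything you can touch and hear.

Everything is fate, bound together by a thread.

Do not forget "the thread in a loving mother's hand, the clothes on her traveling son"; this is one of them.

Water gives people life.

The sky entrusts all things with dreams.

One is the beginning of all things.

The thread forever connects you and me.

Hence my 水天一线 (Water, Sky, One, Thread).…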

Original article:

https://mp.weixin.qq.com/s/H4Xd8H51SVNhgF_x-e-aOw

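Based on the text you enter ({输入文字}), the system will intelligently analyze its emotion, style, and deeper meaning, then match or blend the most fitting design elements from our diverse style collection to create a custom design-language prompt for you.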

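Neon Night Glow: outlined neon strokes, flowing slender lines with natural curves, a transparent texture with an inner glow

Industrial Rustic: rough metal texture, mottled rust decoration, embossed 3D structure, a mechanical-craft feel, accented with rivet details

Childlike Doodle: loose, casual lines, natural hand-drawn texture, lively playful strokes, rounded edges, colorful gradient accents

Sweetheart Trend: dreamy girlish vibe, soft rounded letterforms, bubblegum and candy elements, cute round type, dotted with stars and hearts

Anime Burst: comic-style impact effects, tense extended lines, strokes bursting outward radially, creating a strong visual punch

Tech Blocks: sharply contrasted structure, geometric splitting and recombination, orderly arrangement, a strong futuristic tech feel

Clean Handwriting: natural, relaxed handwritten style, balanced flowing lines, subtle trailing strokes, geometrically pleasing construction, crisp stroke starts and ends

Artistic Fountain Pen: elegant connected strokes, interlacing fine lines, a two-line layout, flowing and emotive letterforms

Metallic Sci-Fi: mechanical edges combined with streamlined design, neon accents, sharp corners, prominent metallic texture, incorporating circuit-chip patterns

Virtual Space: set against a dark background, digitally deconstructed letter structures, cut-line strokes, creating a futuristic tech visual experience

Retro Reprint: heavy, grainy letterforms, an old printing-press effect, uneven ink, slightly worn edges, a strong nostalgic feel

Wild Calligraphy: unrestrained cursive-script style, "flying white" (dry-brush) technique, varied rhythm, vigorous and powerful strokes

Western Classic: Gothic-style variant, tall vertical proportions, straight and pointed letterforms, rich decorative detail, a mysterious and solemn atmosphere

Dynamic Elegance: strokes tapering from thick to thin, tight and fluid structure, pronounced "flying white" technique, strong contrast highlighting the letterforms' dynamic beauty

Deconstructed Bold Brush: exaggerated, varied strokes, free connected-stroke technique, offset and distorted structure, a lively, bold, playful visual effect

Minimal Whitespace: ultra-thin sans-serif design, generous whitespace, airy letter spacing, modern Japanese composition principles

Running-Script Title: the flavor of brush running script, full and balanced structure, decisive and forceful stroke starts, a traditional title-design style

Shattered Ice Crystal: …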

This post shows how we build an LLM agent with Google ADK (Agent Development Kit) and integrate it with our own models.

Follow the quick start to set up the project.

The project structure will look like this:

tree

.
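In ADK, a tool is an ordinary Python function that the agent can call; the framework describes it to the model from the function name, type hints, and docstring. A minimal sketch of such a tool, assuming a hypothetical `get_current_time` function and a hypothetical city table (neither is part of the project above):

```python
from datetime import datetime, timedelta, timezone

# Hypothetical lookup table for this sketch: fixed UTC offsets in hours,
# ignoring daylight saving time. A real tool might call a time-zone API.
CITY_UTC_OFFSETS = {
    "london": 0,
    "berlin": 1,
    "shanghai": 8,
    "new york": -5,
}


def get_current_time(city: str) -> dict:
    """Returns the current local time for a supported city.

    Args:
        city: City name, for example "Berlin".

    Returns:
        {"status": "success", "report": ...} or
        {"status": "error", "error_message": ...}.
    """
    offset = CITY_UTC_OFFSETS.get(city.strip().lower())
    if offset is None:
        # Return a structured error so the model can explain the failure.
        return {"status": "error", "error_message": f"Unknown city: {city}"}
    now = datetime.now(timezone(timedelta(hours=offset)))
    return {
        "status": "success",
        "report": f"The current time in {city} is {now:%Y-%m-%d %H:%M} (UTC{offset:+d}).",
    }
```

With `google-adk` installed, the function would be registered by listing it in the agent's tools, roughly `Agent(name=..., model=..., instruction=..., tools=[get_current_time])`; the `model` argument is where your own models plug in. Returning a dict with an explicit `status` keeps failures readable for the model instead of raising exceptions.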

Here is a simple demo of using browser-use to automate web tasks:

```python
from langchain_openai import ChatOpenAI
from browser_use import Agent
import asyncio
from dotenv import load_dotenv

# Load API keys (e.g. OPENAI_API_KEY) from a local .env file
load_dotenv()

async def main():
    agent = Agent(
        task="Summarize all my tasks in LLM FineTune Tools
```

Mobile App testing AI Agent

Android AI Agent Evaluation

Fofa – Search “app=ollama”

bolt.new

Windsurf

llms.txt

Vercel v0

Vercel Snake Game

https://lmarena.ai

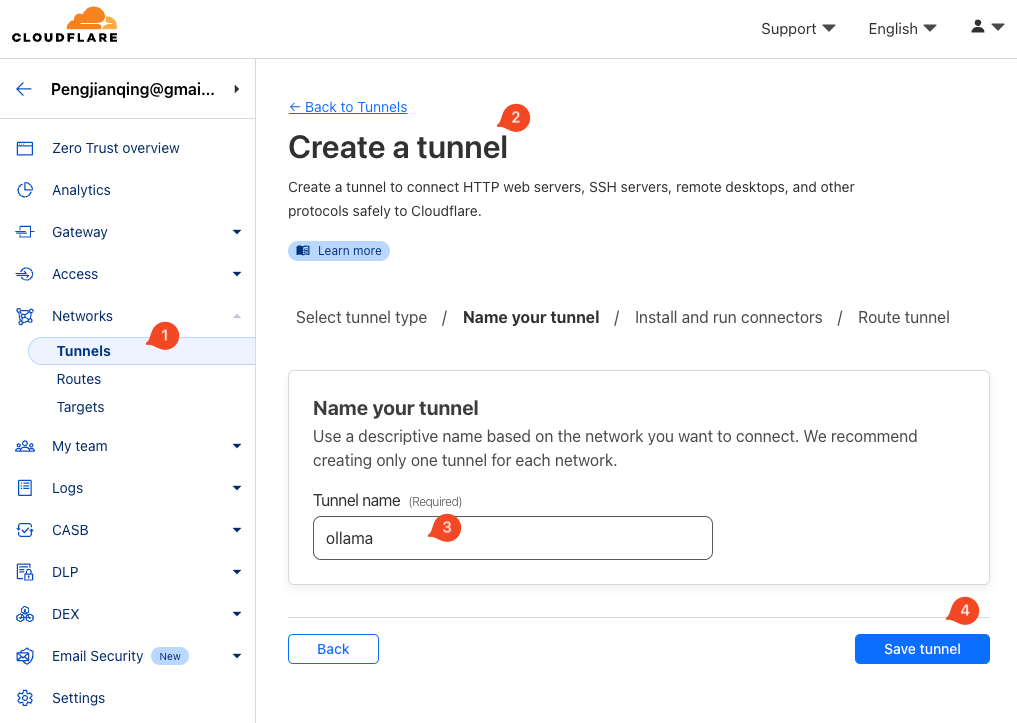

First, create a Tunnel in the Cloudflare web dashboard.

Then install cloudflared on the Mac:

```shell
# The token (shortened here) belongs to the tunnel created in the dashboard
brew install cloudflared &&
sudo cloudflared service install eyJhIjoiNGNkZWY4xxxxx.......
```

After you finish the cloudflared installation, you …

DeepSeek just released DeepSeek R1, and it's open source, so let's run it locally with Ollama.

First, I have a Mac Pro.

Install the monitoring tools
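Once R1 is pulled, Ollama also serves a local REST API that scripts can call. A minimal sketch using only the Python standard library, assuming Ollama's default address `http://localhost:11434` and the model tag `deepseek-r1` (substitute the R1 size you actually pulled):

```python
import json
import urllib.request

# Ollama's default local endpoint; adjust if the server listens elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "deepseek-r1") -> urllib.request.Request:
    """Build a non-streaming POST request to Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "deepseek-r1", timeout: float = 300.0) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=timeout) as resp:
        body = json.load(resp)
    # With "stream": False the server replies with one JSON object;
    # the generated text is in its "response" field.
    return body["response"]
```

With the Ollama app (or `ollama serve`) running and the model fetched via `ollama pull deepseek-r1`, `generate("Why is the sky blue?")` returns the whole answer as one string. Disabling streaming trades incremental output for a simpler single response, which suits quick scripts; the generous timeout allows for slow reasoning-heavy answers on local hardware.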

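2009 Summary | 2010 Year-End Summary | 2011 Year-End Summary | 2012 Year-End Summary | 2013 Year-End Summary | 2014 Year-End Summary | 2015 Year-End Summary | 2016 Year-End Summary | 2017 Year-End Summary | 2018 Year-End Summary | 2019 Year-End Summary | Goodbye 2020 | 2021 Year-End Summary | 2022 Year-End Summary | 2023 Year-End Summary

2024 is about to slip away just like that, without taking a single cloud with it.

Ten years ago everyone looked forward to a future that would keep getting better; now it feels as if the whole world has completely changed. Reality jumps out in front of you when you least expect it, constantly reminding you that everything you once had, expected, and hoped for is gone, fading into the receding dusk.

Counting them up, I wrote 15 blog posts this year on all sorts of topics. In the second year of the AI boom, I still type my posts by hand: the last stubborn stand of a human RPC.

I think I will always keep writing my blog by hand, just as I have always kept this independent blog site running. I can write whatever I want, and nobody censors it. I have kept it going for more than a decade; the cost is high, but it is worth it.

By contrast, my WeChat Channels account is still banned. You never know which video will trip a sensitive word; just like "baozi" (steamed buns) and Winnie the Pooh, something can become taboo any day.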

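If anything this year was fun, it was cryptography. I spent some time learning various cryptographic algorithms: symmetric encryption, asymmetric encryption, key exchange, elliptic curves, and discrete logarithms. Even now, I only know the basics.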
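Key exchange and the discrete logarithm problem meet in Diffie-Hellman. A toy sketch, assuming illustrative parameters picked only for this example (the Mersenne prime 2^127 - 1 and g = 3, not a vetted group); real systems use standardized groups or elliptic-curve variants such as X25519:

```python
import secrets

# Toy parameters for illustration only: P = 2**127 - 1 is prime, and G = 3
# is an arbitrary choice for this sketch, not a vetted generator.
P = 2**127 - 1
G = 3


def make_keypair() -> tuple[int, int]:
    """Return (private, public), where public = G^private mod P."""
    private = secrets.randbelow(P - 3) + 2  # private exponent in [2, P-2]
    public = pow(G, private, P)
    return private, public


def shared_secret(my_private: int, their_public: int) -> int:
    """Both sides reach the same value, since (G^a)^b = (G^b)^a mod P."""
    return pow(their_public, my_private, P)
```

Each side publishes only its public value; an eavesdropper who sees both public values would have to solve a discrete logarithm to recover either private exponent.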

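As the company's new cryptography course instructor, I delivered a three-day cryptography training course for the first time.

The road is long, and there is still a lot to learn. Cryptography is the most important part of information security and touches every aspect of it. As a cornerstone of the modern Internet, it is worth understanding in depth, and since it does not change much, it makes a solid foundation to study deeply. That said, the latest quantum computing progress Google recently announced already poses some potential threats to modern cryptography.

The US NIST has been running a worldwide call for encryption algorithms for the post-quantum era, and in August this year some of the final-round algorithms were released. If you are interested, take a look…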